The fastest WebSocket implementation

This post measures the performance of wtx and other projects to figure out which one is the fastest. If any of the metrics or procedures described here are flawed, feel free to point them out.

Metrics

Unlike autobahn, the standard automated test suite that verifies client and server implementations, there isn't an easy and comprehensive benchmark suite for the WebSocket Protocol (at least I couldn't find one), so let's create one.

Enter ws-bench! The program applies three parameters to the listening servers, combined in ways that try to replicate what could plausibly happen in a production environment.

| Parameter | Low | Medium | High |
|---|---|---|---|
| Number of connections | 1 | 128 | 256 |
| Number of messages | 1 | 64 | 128 |
| Transfer memory (KiB) | 1 | 64 | 128 |
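The table defines a 3 x 3 x 3 grid of parameter levels. As a rough illustration (not ws-bench's actual code), the full set of combinations a benchmark runner could iterate over can be enumerated like this; the constant and function names are hypothetical.

```rust
// Hypothetical encoding of the parameter levels from the table (low, medium, high).
const CONNECTIONS: [u32; 3] = [1, 128, 256];
const MESSAGES: [u32; 3] = [1, 64, 128];
const TRANSFER_MEMORY_KIB: [u32; 3] = [1, 64, 128];

/// Returns every (connections, messages, transfer memory in KiB) triple.
fn combinations() -> Vec<(u32, u32, u32)> {
    let mut out = Vec::with_capacity(27);
    for &c in &CONNECTIONS {
        for &m in &MESSAGES {
            for &t in &TRANSFER_MEMORY_KIB {
                out.push((c, m, t));
            }
        }
    }
    out
}

fn main() {
    for (c, m, t) in combinations() {
        println!("connections={c} messages={m} transfer_memory={t}KiB");
    }
}
```

Not every one of these 27 combinations ends up in the results, since some of them produce near-zero timings for every project.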

Number of connections

Measures how well a server handles multiple concurrent connections. Implementations differ here: some are single-threaded, some run concurrent tasks on a single thread, and others are multi-threaded.

In some cases this metric is also influenced by the underlying mechanism responsible for scheduling the execution of workers/tasks.

Number of messages

When a payload is very large, it can be sent as several sequential frames, each holding a portion of the original payload. The set of frames that together carry the original payload is called a "message" here, and the number of messages can reflect both an implementation's ability to encode and decode them and the impact of network latency (round-trip time).
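To illustrate fragmentation as defined in RFC 6455 (section 5.4), here is a simplified sketch that splits one binary payload into frames: the first frame carries the binary opcode, the following ones use the continuation opcode, and only the last one sets the FIN bit. Masking, header serialization and control frames are deliberately left out, and the `Frame` type and the `fragment`/`reassemble` functions are hypothetical, not taken from wtx or any other benchmarked project.

```rust
// Opcodes from RFC 6455, section 5.2.
const OP_CONTINUATION: u8 = 0x0;
const OP_BINARY: u8 = 0x2;

/// Simplified frame: only FIN, opcode and payload (no masking, no wire encoding).
#[derive(Debug, PartialEq)]
struct Frame<'a> {
    fin: bool,
    opcode: u8,
    payload: &'a [u8],
}

/// Splits one binary message into frames of at most `max_frame_len` bytes.
fn fragment(message: &[u8], max_frame_len: usize) -> Vec<Frame<'_>> {
    assert!(max_frame_len > 0, "frame length must be positive");
    if message.is_empty() {
        // An empty message is still one (final) frame.
        return vec![Frame { fin: true, opcode: OP_BINARY, payload: message }];
    }
    let chunks: Vec<&[u8]> = message.chunks(max_frame_len).collect();
    let last = chunks.len() - 1;
    chunks
        .into_iter()
        .enumerate()
        .map(|(i, payload)| Frame {
            fin: i == last, // only the final frame closes the message
            opcode: if i == 0 { OP_BINARY } else { OP_CONTINUATION },
            payload,
        })
        .collect()
}

/// Concatenates the frame payloads, as a receiver would once FIN is seen.
fn reassemble(frames: &[Frame<'_>]) -> Vec<u8> {
    frames.iter().flat_map(|f| f.payload.iter().copied()).collect()
}

fn main() {
    let message = vec![7u8; 10];
    let frames = fragment(&message, 4);
    for f in &frames {
        println!("fin={} opcode={:#x} len={}", f.fin, f.opcode, f.payload.len());
    }
    assert_eq!(reassemble(&frames), message);
}
```

With a 10-byte payload and 4-byte frames, this produces three frames (4, 4 and 2 bytes), and every extra frame is one more header the implementation has to encode and decode.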

Transfer memory

It is common to hear that a network round trip costs more than a memory allocation, which is generally true. Unfortunately, some individuals take this as a reason to call the heap allocator indiscriminately, without investigating whether doing so has a negative impact on performance.

Frames tend to be small, but some applications use WebSocket to transfer different types of real-time blobs, so it is worth investigating the impact of larger payloads.
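To make the allocation concern concrete, the sketch below contrasts allocating a fresh buffer for every message with reusing a single buffer across messages. It illustrates the general pattern under simplified assumptions (messages already in memory, "echo" reduced to a copy); the function names are hypothetical and the code is not taken from any of the benchmarked projects.

```rust
/// Allocates a brand-new buffer for every message (one heap allocation each).
fn echo_allocating(messages: &[Vec<u8>]) -> usize {
    let mut total = 0;
    for msg in messages {
        let buffer: Vec<u8> = msg.clone(); // new allocation per message
        total += buffer.len();
    }
    total
}

/// Reuses one buffer: `clear` drops the contents but keeps the capacity, so once
/// the largest message has been seen no further allocation is needed.
fn echo_reusing(messages: &[Vec<u8>], buffer: &mut Vec<u8>) -> usize {
    let mut total = 0;
    for msg in messages {
        buffer.clear();
        buffer.extend_from_slice(msg);
        total += buffer.len();
    }
    total
}

fn main() {
    // Messages of 1, 2, 3 and 4 KiB.
    let messages: Vec<Vec<u8>> = (1..=4).map(|kib| vec![0u8; kib * 1024]).collect();
    let mut buffer = Vec::new();
    let a = echo_allocating(&messages);
    let b = echo_reusing(&messages, &mut buffer);
    assert_eq!(a, b);
    println!("bytes={a} reused_capacity={}", buffer.capacity());
}
```

Both functions move the same number of bytes; the difference is how often the allocator is called, which grows in relevance as payloads get larger.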

Investigation

| Project | Language | Fork | Application |
|---|---|---|---|
| uWebSockets | C++ | https://github.com/c410-f3r/uWebSockets | examples/EchoServer.cpp |
| fastwebsockets | Rust | https://github.com/c410-f3r/fastwebsockets | examples/echo_server.rs |
| gorilla/websocket | Go | https://github.com/c410-f3r/websocket | examples/echo/server.go |
| tokio-tungstenite | Rust | https://github.com/c410-f3r/tokio-tungstenite | examples/echo-server.rs |
| websockets | Python | https://github.com/c410-f3r/regular-crates/blob/wtx-0.5.2/ws-bench/_websockets.py | _websockets.py |
| wtx | Rust | https://github.com/c410-f3r/wtx | wtx-instances/generic-examples/web-socket-server.rs |

To ensure some level of fairness, the files of all six projects were modified to remove writes to stdout, enforce optimized builds where applicable, and remove SSL and compression configurations.

The benchmark procedure is quite simple: servers listen for incoming requests on different ports, the ws-bench binary is called with all URIs, and the resulting chart is generated. In fact, everything is declared in this bash script.

| Chart | Connections | Messages | Memory | fastwebsockets | gorilla/websockets | tokio_tungstenite | uWebsockets | websockets | wtx_hyper | wtx-_raw_async_std | wtx_raw_tokio |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Chart | low | mid | high | 104 | 273 | 102 | 88 | 232 | 64❗ | 67 | 65 |

| Chart | low | high | low | 5759 | 5783 | 5784 | 5760 | 5728❗ | 5802 | 5764 | 5736 |

| Chart | low | high | mid | 336 | 546 | 235 | 192 | 526 | 160 | 163 | 159❗ |

| Chart | low | high | high | 331 | 960 | 360 | 325 | 725 | 250 | 282 | 249❗ |

| Chart | mid | low | high | 18 | 22 | 18 | 15 | 31 | 14 | 12❗ | 13 |

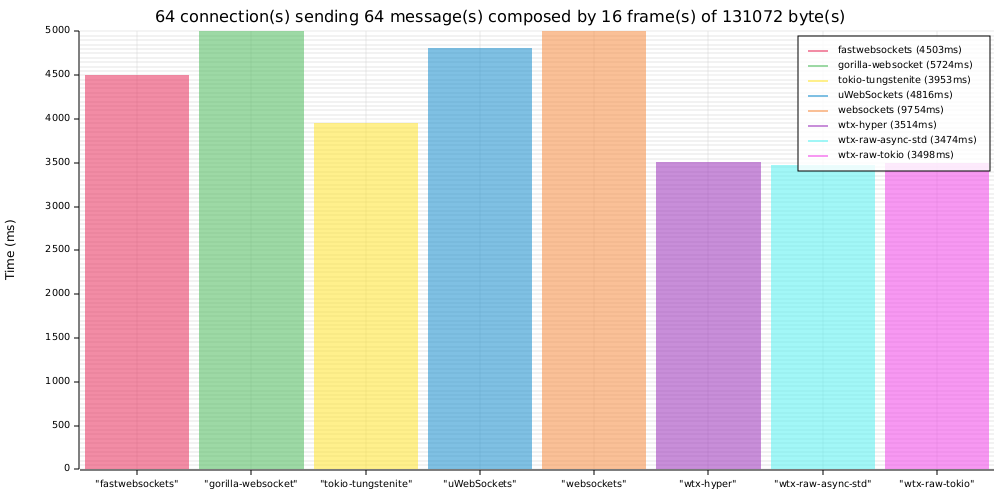

| Chart | mid | mid | high | 4503 | 5724 | 3959 | 4816 | 9754 | 3514 | 3474❗ | 3498 |

| Chart | mid | high | low | 5684❗ | 5800 | 5721 | 5687 | 6681 | 5689 | 5764 | 5684❗ |

| Chart | mid | high | mid | 11020 | 13735 | 8365 | 9072 | 19874 | 6937 | 6895❗ | 6933 |

| Chart | mid | high | high | 19808 | 23178 | 15471 | 19821 | 38327 | 13759 | 13693❗ | 13749 |

| Chart | high | low | low | 52 | 71 | 98 | 46 | 1053 | 52 | 41❗ | 88 |

| Chart | high | low | mid | 84 | 86 | 74 | 51 | 1043 | 60 | 50 | 48❗ |

| Chart | high | low | high | 124 | 82 | 78 | 57 | 1059 | 55 | 54❗ | 58 |

| Chart | high | mid | low | 2987 | 3051 | 3027 | 2955 | 5071 | 2981 | 3000 | 2942❗ |

| Chart | high | mid | mid | 20150 | 21475 | 14593 | 18931 | 41368 | 11172 | 10987❗ | 11268 |

| Chart | high | mid | high | 41846 | 43514 | 20706 | 21779 | 41091 | 16118 | 15555 | 15524❗ |

| Chart | high | high | low | 5828 | 5941 | 5830 | 5790 | 9400 | 5778❗ | 5877 | 5808 |

| Chart | high | high | mid | 53756 | 55063 | 44829 | 47312 | 107758 | 36628 | 34333❗ | 37000 |

Tested on a notebook with an Intel i5-1135G7, a 256 GB SSD and 32 GB of RAM. Combinations made up solely of low and mid values were discarded because they showed near-zero values for every project.

soketto and ws-tools were initially tested but later dropped due to frequent shutdowns. I didn't dig into the root causes, but they can be added back once the underlying problems are fixed by their authors.

Result

wtx as a whole (all three variants combined) scored an average of 6350.31 ms, followed by tokio-tungstenite with 7602.94 ms, uWebSockets with 8393.94 ms, fastwebsockets with 10140.58 ms, gorilla/websocket with 10900.23 ms and finally websockets with 17042.41 ms.
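These averages can be reproduced from the results table: each project's figure is the arithmetic mean of its 17 rows, and the wtx figure matches the mean over all rows of its three variants combined. The sketch below checks this for tokio-tungstenite and wtx; the constant names are illustrative and the values are copied from the table in row order.

```rust
// Timings in milliseconds, copied from the results table (17 rows each).
const TOKIO_TUNGSTENITE: [u32; 17] = [
    102, 5784, 235, 360, 18, 3959, 5721, 8365, 15471, 98, 74, 78, 3027, 14593, 20706, 5830, 44829,
];
const WTX_HYPER: [u32; 17] = [
    64, 5802, 160, 250, 14, 3514, 5689, 6937, 13759, 52, 60, 55, 2981, 11172, 16118, 5778, 36628,
];
const WTX_RAW_ASYNC_STD: [u32; 17] = [
    67, 5764, 163, 282, 12, 3474, 5764, 6895, 13693, 41, 50, 54, 3000, 10987, 15555, 5877, 34333,
];
const WTX_RAW_TOKIO: [u32; 17] = [
    65, 5736, 159, 249, 13, 3498, 5684, 6933, 13749, 88, 48, 58, 2942, 11268, 15524, 5808, 37000,
];

/// Arithmetic mean of a set of timings.
fn mean(samples: &[u32]) -> f64 {
    let sum: u64 = samples.iter().map(|&v| u64::from(v)).sum();
    sum as f64 / samples.len() as f64
}

fn main() {
    // wtx "as a whole": all rows of the three variants pooled together.
    let wtx: Vec<u32> = WTX_HYPER
        .iter()
        .chain(&WTX_RAW_ASYNC_STD)
        .chain(&WTX_RAW_TOKIO)
        .copied()
        .collect();
    println!("tokio-tungstenite: {:.2} ms", mean(&TOKIO_TUNGSTENITE)); // 7602.94
    println!("wtx: {:.2} ms", mean(&wtx)); // 6350.31
}
```

Note that a plain mean is dominated by the slowest combinations (the high/high/mid row alone is tens of seconds), so the ranking mostly reflects the heavy workloads.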

websockets performed the worst in most tests, and it is unknown whether this behavior can be improved. Perhaps some modification to the _websockets.py file would help? Let me know if that is the case.

Among the three metrics, the number of messages was the most impactful, because the client always verifies the content sent back by the server, which leads to sequential-like behavior. Perhaps the number of messages is not a good parameter for benchmarking purposes.
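A simplified model shows why verifying every echo serializes the work. The sketch below is an assumption-laden stand-in, not ws-bench's code: threads and `std::sync::mpsc` channels replace sockets, and a fixed sleep (`SIMULATED_RTT`, a hypothetical value) stands in for network latency. Because each message must come back and be checked before the next one is sent, the elapsed time of one connection grows at least linearly with the number of messages.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::{Duration, Instant};

/// Hypothetical fixed latency standing in for a network round trip.
const SIMULATED_RTT: Duration = Duration::from_millis(2);

/// Sends `messages` payloads one at a time to an echo thread and verifies each
/// reply before sending the next one. Returns (verified count, elapsed time).
fn run_sequential(messages: usize, payload_len: usize) -> (usize, Duration) {
    let (to_server, server_rx) = mpsc::channel::<Vec<u8>>();
    let (to_client, client_rx) = mpsc::channel::<Vec<u8>>();

    // Echo "server": waits the simulated latency, then returns the payload unchanged.
    let server = thread::spawn(move || {
        for payload in server_rx {
            thread::sleep(SIMULATED_RTT);
            if to_client.send(payload).is_err() {
                break;
            }
        }
    });

    let start = Instant::now();
    let mut verified = 0;
    for i in 0..messages {
        let payload = vec![(i % 256) as u8; payload_len];
        to_server.send(payload.clone()).expect("server alive");
        // Blocking here is what serializes the run: message i + 1 cannot be
        // sent until message i has come back and been checked.
        let echoed = client_rx.recv().expect("echo received");
        assert_eq!(echoed, payload, "echo mismatch");
        verified += 1;
    }
    let elapsed = start.elapsed();

    drop(to_server); // closing the channel ends the server loop
    server.join().expect("server thread panicked");
    (verified, elapsed)
}

fn main() {
    for messages in [1, 64, 128] {
        let (verified, elapsed) = run_sequential(messages, 1024);
        println!("messages={messages} verified={verified} elapsed={elapsed:?}");
    }
}
```

Pipelining sends and verifying replies asynchronously would remove this lower bound, which is one way the messages parameter could be made less latency-bound.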

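To finish, wtx was the fastest in almost every test (the exceptions being the low/high/low combination, won by websockets, and mid/high/low, where fastwebsockets tied with wtx_raw_tokio) and can indeed be called the fastest WebSocket implementation, at least according to the presented projects and methodology.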